Relational RL

TL;DR: RRL combines Reinforcement Learning with Relational Learning or Inductive Logic Programming by representing states, actions and policies in a first-order (relational) language.

Structure

Feature Extraction

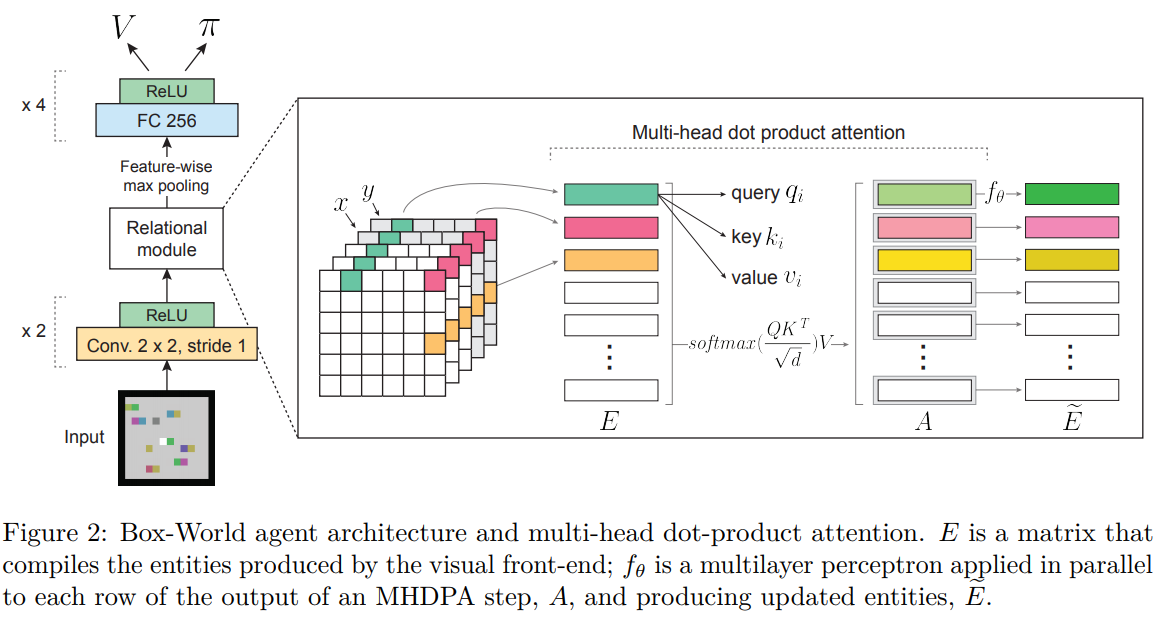

We apply a CNN to the raw image and obtain an $n \times n \times k$ feature map, i.e. one $k$-dimensional vector per pixel ($k$ is the number of output channels of the CNN). Each vector is then concatenated with its $x$ and $y$ coordinates to indicate its position in the map.

We treat the resulting $n^2$ pixel-feature vectors as the set of entities.
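A minimal NumPy sketch of this step, assuming the CNN output is already available as an `(n, n, k)` array. The function name `feature_map_to_entities` and the scaling of coordinates to [-1, 1] are illustrative choices, not taken from the source.

```python
import numpy as np

def feature_map_to_entities(feature_map: np.ndarray) -> np.ndarray:
    """Turn an (n_rows, n_cols, k) CNN feature map into entity vectors.

    Returns an array of shape (n_rows * n_cols, k + 2): each pixel's
    k-dimensional feature vector followed by its (x, y) coordinates.
    """
    n_rows, n_cols, k = feature_map.shape
    # Coordinates scaled to [-1, 1] (an assumption; raw indices also work).
    ys = np.linspace(-1.0, 1.0, n_rows)
    xs = np.linspace(-1.0, 1.0, n_cols)
    yy, xx = np.meshgrid(ys, xs, indexing="ij")   # each (n_rows, n_cols)
    coords = np.stack([xx, yy], axis=-1)          # (n_rows, n_cols, 2) as (x, y)
    with_pos = np.concatenate([feature_map, coords], axis=-1)
    # Flatten the spatial grid: one row per pixel, i.e. one entity per pixel.
    return with_pos.reshape(n_rows * n_cols, k + 2)
```

For a 3×3 map with 4 channels this yields 9 entities of size 6; the first entity (top-left pixel) carries coordinates (-1, -1).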

Relational Module

The set of entities is then passed to the relational module, which iteratively applies an "attention block" to the entity representations.

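The attention block is essentially the multi-head dot-product attention module of the Transformer: each entity is projected to a query, a key and a value, and its updated representation is a weighted sum of the values of all entities, with weights given by a softmax over query-key dot products.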
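A minimal NumPy sketch of multi-head dot-product attention over the entity set, applied repeatedly. The parameter layout (four `d × d` projection matrices), the sharing of weights across iterations, and the plain residual connection are assumptions for illustration; the LayerNorm and feed-forward MLP of a full Transformer block are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(entities, w_q, w_k, w_v, w_o, num_heads):
    """entities: (N, d); w_q, w_k, w_v, w_o: (d, d); d divisible by num_heads."""
    n, d = entities.shape
    d_head = d // num_heads

    def split(x):
        # (N, d) -> (num_heads, N, d_head)
        return x.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    q = split(entities @ w_q)
    k = split(entities @ w_k)
    v = split(entities @ w_v)
    # Scores between every pair of entities: (num_heads, N, N).
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)
    heads = weights @ v                              # (num_heads, N, d_head)
    merged = heads.transpose(1, 0, 2).reshape(n, d)  # concatenate the heads
    return merged @ w_o

def relational_module(entities, params, num_blocks, num_heads):
    """Apply the same attention block num_blocks times (shared weights assumed)."""
    x = entities
    for _ in range(num_blocks):
        # Residual connection around the attention block.
        x = x + multi_head_attention(x, *params, num_heads=num_heads)
    return x
```

No positional encoding is added inside the block, so permuting the input entities permutes the output in the same way; position information enters only through the concatenated coordinates.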

Policy and Value Network

The output of the relational module is aggregated by feature-wise max-pooling across space (reducing the $n \times n \times k$ tensor to a $k$-dimensional vector). This vector is then used to produce the value estimate and the policy for an actor-critic algorithm.
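A minimal sketch of the pooling and the two output heads, assuming the relational module's output is flattened to shape `(n*n, k)`. Using a single linear layer for each head, and the parameter names `w_pi`, `b_pi`, `w_v`, `b_v`, are assumptions; the source does not specify the head architecture.

```python
import numpy as np

def policy_and_value(entities, w_pi, b_pi, w_v, b_v):
    """entities: (n*n, k); w_pi: (k, num_actions); b_pi: (num_actions,);
    w_v: (k,); b_v: scalar. Returns (action probabilities, state value)."""
    pooled = entities.max(axis=0)        # feature-wise max over space -> (k,)
    logits = pooled @ w_pi + b_pi        # (num_actions,)
    logits = logits - logits.max()       # stabilise the softmax
    policy = np.exp(logits) / np.exp(logits).sum()
    value = float(pooled @ w_v + b_v)    # scalar critic estimate
    return policy, value
```

Because the max is taken over entities, the pooled vector (and hence the policy and value) does not depend on the order of the entities.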

Task

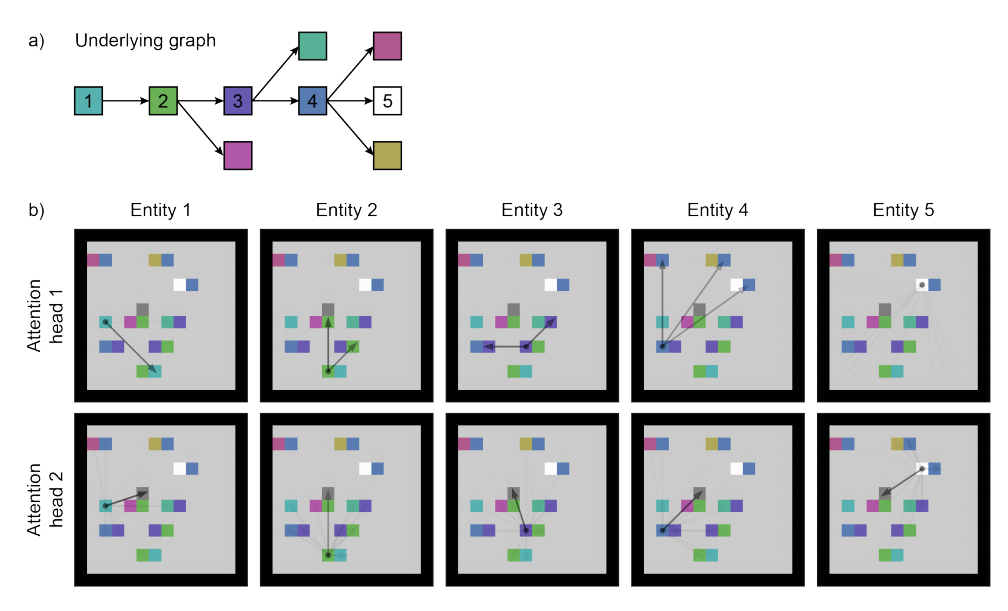

Box-World

Actions: left, right, up, down.

Goal: pick up keys and open boxes (a box is two adjacent colored pixels).

The agent can pick up loose keys (isolated colored pixels) and use them to open boxes whose lock matches the key's color.

Most boxes contain a key, and one box contains the gem (colored white); the goal of the game is to reach the gem.
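The key-and-lock chain can be illustrated with a toy model that ignores the grid and movement entirely. It assumes the agent holds one key at a time and that a key is used up when it opens a box, so a solution is a single chain starting from one loose key; the function `solve_box_world` and the color strings are hypothetical, chosen only for this sketch.

```python
from collections import deque

GEM = "white"  # the gem is colored white

def solve_box_world(loose_keys, boxes):
    """Return the boxes (lock, content) to open, in order, to reach the gem.

    loose_keys: colors of isolated key pixels.
    boxes: (lock_color, content_color) pairs; content GEM marks the gem box.
    Returns None if no chain of boxes leads to the gem.
    """
    # Breadth-first search over the color of the key currently held.
    queue = deque((key, []) for key in loose_keys)
    seen = set(loose_keys)
    while queue:
        held, path = queue.popleft()
        for lock, content in boxes:
            if lock != held:
                continue  # this key does not fit this box
            new_path = path + [(lock, content)]
            if content == GEM:
                return new_path
            if content not in seen:
                seen.add(content)
                queue.append((content, new_path))
    return None
```

For example, with one loose red key and boxes `("red", "blue")`, `("red", "green")`, `("blue", "white")`, `("green", "yellow")`, the search returns `[("red", "blue"), ("blue", "white")]`; opening the green box instead leads to a dead end, which is the kind of branch the agent must reason about.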

StarCraft
