Pitfalls of Graph Neural Network Evaluation

Motivation

The authors aim to fairly evaluate four prominent GNNs (GCN, MoNet, GraphSAGE, and GAT) on node classification by:

using 100 random train/validation/test splits rather than a single fixed split

using a standardized training and hyperparameter tuning procedure for all models

Experiment

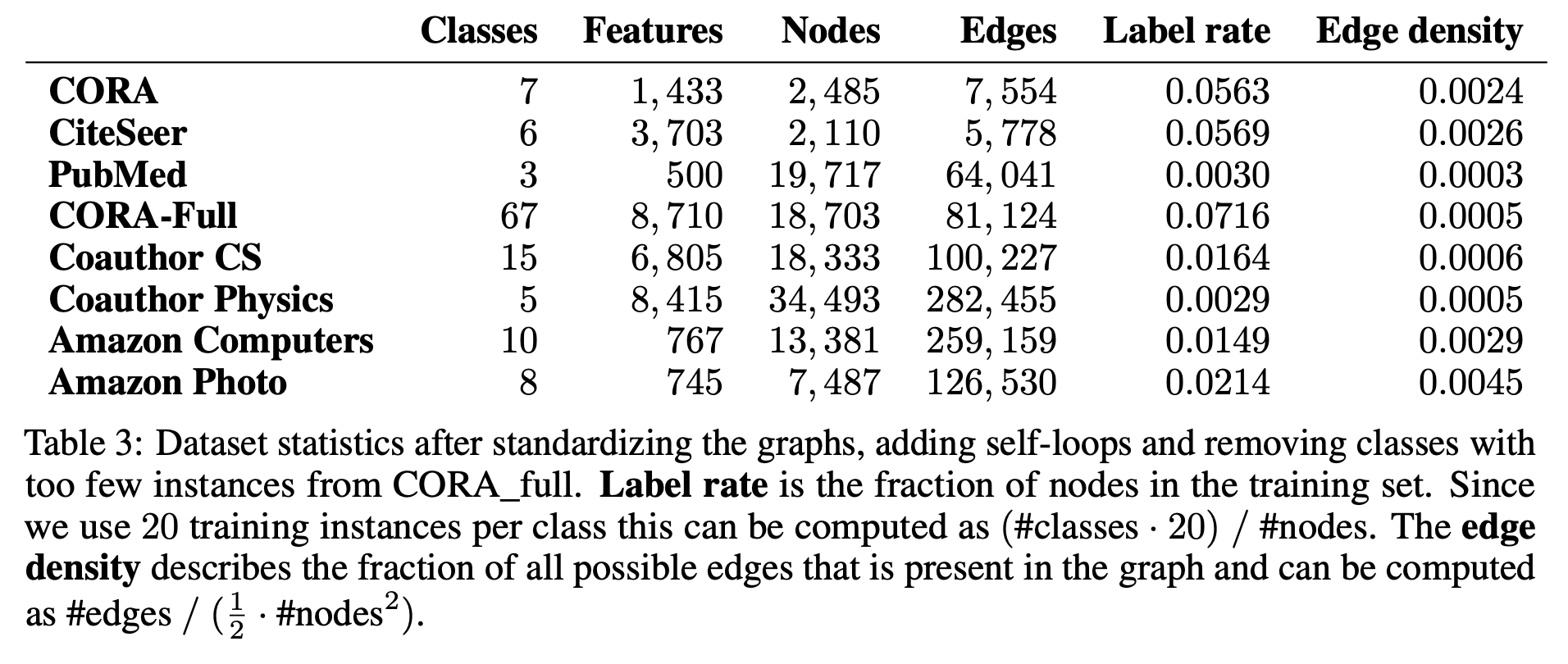

The authors used 8 datasets:

PubMed

CiteSeer

CORA

CORA-Full

Coauthor CS: a co-authorship graph based on the Microsoft Academic Graph from the KDD Cup 2016 challenge, where nodes are authors, edges indicate co-authorship, node features represent the keywords of each author's papers, and class labels indicate each author's most active field of study

Coauthor Physics: same as Coauthor CS

Amazon Computers: segments of the Amazon co-purchase graph, where nodes represent goods, edges indicate that two goods are frequently bought together, node features are bag-of-words encoded product reviews, and class labels are given by the product category

Amazon Photo: same as Amazon Computers

For all datasets, the authors treat the graph as undirected and only consider its largest connected component. The dataset statistics are shown below:

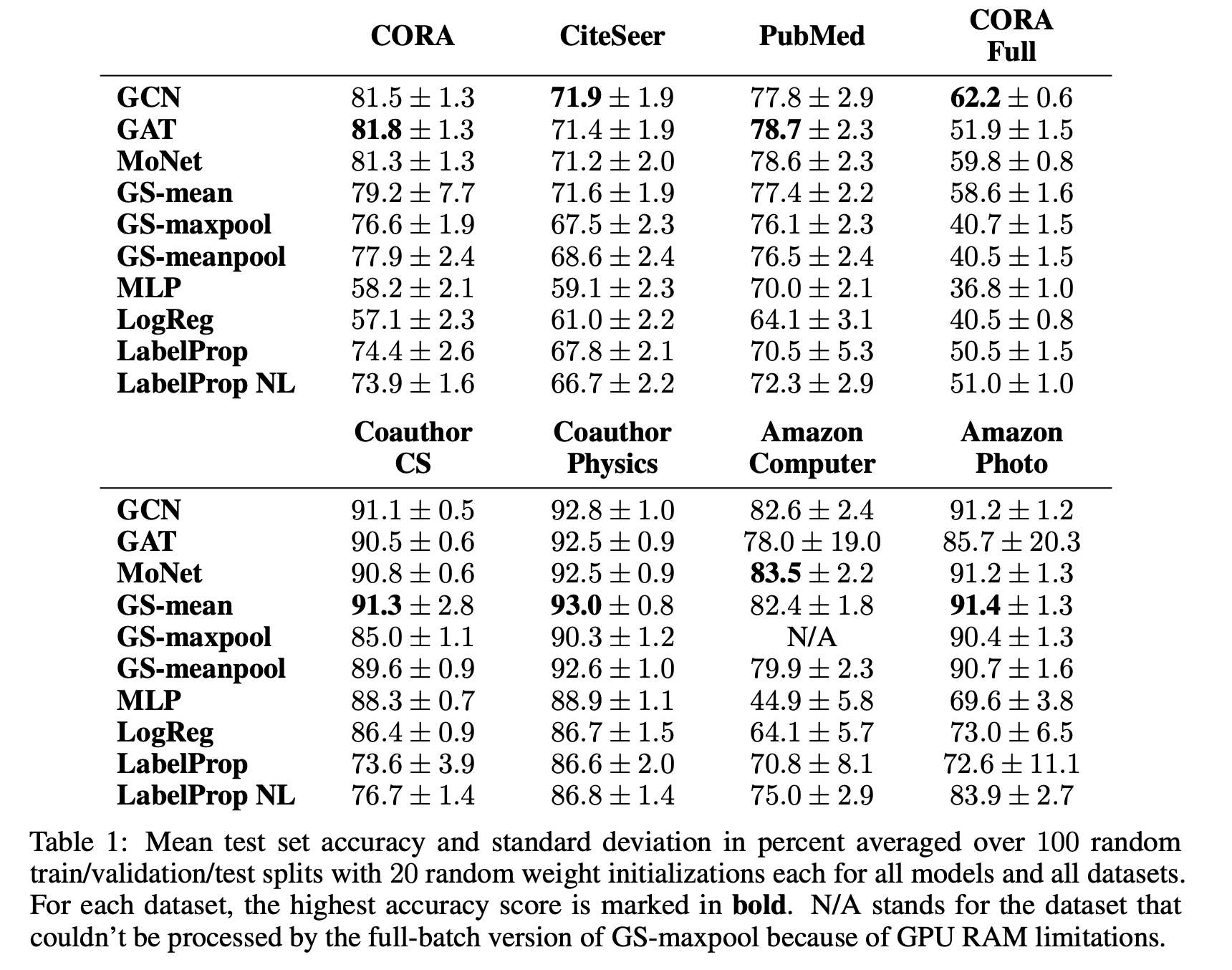
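The preprocessing described above (treating the graph as undirected and keeping only the largest connected component) can be sketched in a few lines. This is a minimal illustration on a plain edge list, not the authors' code; the function name `largest_connected_component` and the edge-list input format are assumptions made for this example, and nodes without any edge are simply not represented.

```python
from collections import defaultdict, deque


def largest_connected_component(edges):
    """Return (nodes, undirected_edges) of the largest connected component.

    `edges` is an iterable of (u, v) pairs; direction is ignored.
    Hypothetical helper for illustration only.
    """
    # Build a symmetric adjacency structure, i.e. treat the graph as undirected.
    neighbors = defaultdict(set)
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)

    seen = set()
    best = set()
    for start in neighbors:
        if start in seen:
            continue
        # Breadth-first search to collect one connected component.
        component = {start}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nb in neighbors[node]:
                if nb not in component:
                    component.add(nb)
                    queue.append(nb)
        seen |= component
        if len(component) > len(best):
            best = component

    # Keep each undirected edge once, dropping self-loops and duplicates.
    kept = sorted(
        {(min(u, v), max(u, v)) for u, v in edges
         if u in best and v in best and u != v}
    )
    return best, kept


nodes, kept_edges = largest_connected_component(
    [(0, 1), (1, 2), (2, 0), (3, 4), (1, 0)]
)
```

In this toy input, the triangle on nodes 0, 1, 2 is kept and the separate edge between 3 and 4 is discarded.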

The authors performed an extensive grid search over the learning rate, hidden layer size, L2 regularization strength, and dropout probability. They restricted the search space so that every model has at most the same given number of trainable parameters. For every model, they picked the hyperparameter configuration that achieved the best average accuracy on the Cora and CiteSeer datasets (averaged over 100 random splits and 20 random initializations per split). In all cases, they used 20 labeled nodes per class as the training set, 30 nodes per class as the validation set, and the remaining nodes as the test set.
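The splitting scheme above (20 training nodes per class, 30 validation nodes per class, everything else as test) might look roughly like the sketch below. The function name `random_split`, its parameters, and the choice to raise an error for classes with too few nodes are assumptions for illustration; the summary does not say how the authors handle such classes.

```python
import random
from collections import defaultdict


def random_split(labels, num_train_per_class=20, num_val_per_class=30, seed=0):
    """Sample one random train/validation/test split of node indices.

    `labels[i]` is the class of node i. Hypothetical helper, not the authors' code.
    """
    rng = random.Random(seed)

    # Group node indices by class label.
    by_class = defaultdict(list)
    for node, label in enumerate(labels):
        by_class[label].append(node)

    train, val, test = [], [], []
    needed = num_train_per_class + num_val_per_class
    for label in sorted(by_class):
        nodes = by_class[label]
        if len(nodes) < needed:
            # Assumption: fail loudly rather than guess how to treat small classes.
            raise ValueError(f"class {label!r} has fewer than {needed} nodes")
        rng.shuffle(nodes)
        train += nodes[:num_train_per_class]
        val += nodes[num_train_per_class:needed]
        test += nodes[needed:]  # all remaining nodes go to the test set
    return train, val, test


# Example: 100 different splits of a toy graph with 3 classes of 100 nodes each.
toy_labels = [i % 3 for i in range(300)]
splits = [random_split(toy_labels, seed=s) for s in range(100)]
```

Each seed yields a different split, so averaging a model's test accuracy over `splits` (and over several random initializations per split) mirrors the evaluation protocol described above.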

Meanwhile, they keep the model architectures as they are in the original papers or reference implementations, including:

the type and sequence of layers

choice of activation functions

placement of dropout

choices as to where to apply L2 regularization

the number of attention heads for GAT is fixed to be 8

the number of Gaussian kernels for MoNet is fixed to be 2

all the models have 2 layers (input features → hidden layer → output layer)

For the remaining training choices (optimizer, parameter initialization, learning rate decay, maximum number of training epochs, early stopping criterion, patience, and validation frequency), they use the same settings for all models.
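To make the role of the maximum number of epochs, patience, and validation frequency concrete, here is a generic early stopping loop. It is only a sketch: the function name, the callbacks `train_step` and `validate`, the default values, and the "stop when the validation score has not improved for `patience` epochs" criterion are all assumptions, since this summary does not state the exact criterion the authors use.

```python
def train_with_early_stopping(train_step, validate,
                              max_epochs=1000, patience=50, val_every=1):
    """Generic early stopping loop (hypothetical defaults).

    train_step(epoch) runs one epoch of training.
    validate(epoch) returns a score where higher is better.
    """
    best_score = float("-inf")
    best_epoch = -1
    for epoch in range(max_epochs):
        train_step(epoch)
        if epoch % val_every != 0:
            continue  # only evaluate every `val_every` epochs
        score = validate(epoch)
        if score > best_score:
            best_score, best_epoch = score, epoch
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs
    return best_epoch, best_score


# Toy usage: the validation score peaks at epoch 5 and then declines.
calls = []
best_epoch, best_score = train_with_early_stopping(
    train_step=calls.append,
    validate=lambda epoch: -abs(epoch - 5),
    max_epochs=100,
    patience=3,
)
```

Sharing one such loop across all models is what keeps the comparison from depending on per-model training tricks.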

They also consider four baseline models:

Logistic regression

Multilayer perceptron

Label propagation

Normalized Laplacian label propagation

The first two ignore the graph structure, while the last two use only the graph structure and ignore the node attributes.
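As a rough illustration of how a structure-only baseline works, the sketch below runs an iterative label propagation on an undirected edge list. The summary does not give the exact formulations used in the paper, so both update rules here are assumptions: the `normalized=False` variant averages neighbor scores (row-normalized adjacency), and the `normalized=True` variant weights each edge by 1/sqrt(deg(u) * deg(v)), as in the symmetric normalized Laplacian. The function name, `alpha`, and `num_iters` are hypothetical, and every node is assumed to have at least one neighbor (as in a connected graph).

```python
import math
from collections import defaultdict


def label_propagation(edges, num_nodes, train_labels, num_classes,
                      alpha=0.9, num_iters=50, normalized=False):
    """Predict a class for every node from graph structure alone.

    train_labels maps a labeled node index to its class. Illustrative sketch only.
    """
    neighbors = defaultdict(set)
    for u, v in edges:
        if u != v:
            neighbors[u].add(v)
            neighbors[v].add(u)
    deg = [len(neighbors[i]) for i in range(num_nodes)]

    def weight(u, v):
        # Weight of neighbor v's scores when updating node u.
        if normalized:
            return 1.0 / math.sqrt(deg[u] * deg[v])
        return 1.0 / deg[u]

    # One-hot scores for labeled nodes, zeros elsewhere.
    Y = [[0.0] * num_classes for _ in range(num_nodes)]
    for node, c in train_labels.items():
        Y[node][c] = 1.0

    F = [row[:] for row in Y]
    for _ in range(num_iters):
        new_F = []
        for u in range(num_nodes):
            agg = [0.0] * num_classes
            for v in neighbors[u]:
                w = weight(u, v)
                for c in range(num_classes):
                    agg[c] += w * F[v][c]
            # Mix propagated scores with the original labels.
            new_F.append([alpha * agg[c] + (1 - alpha) * Y[u][c]
                          for c in range(num_classes)])
        F = new_F

    return [max(range(num_classes), key=lambda c: F[u][c])
            for u in range(num_nodes)]


# Toy usage: a path graph 0-1-2-3-4-5 with one labeled node at each end.
path_edges = [(i, i + 1) for i in range(5)]
pred_plain = label_propagation(path_edges, 6, {0: 0, 5: 1}, num_classes=2)
pred_norm = label_propagation(path_edges, 6, {0: 0, 5: 1}, num_classes=2,
                              normalized=True)
```

Note that node features never enter the computation, which is the point of these baselines: they measure how far the graph structure alone can go.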

See below for the experimental results:
